CMU Releases New Tool for Experimenting with Text-to-Image Generative AI

Mar 25, 2024

Carnegie Mellon University graduate student Adithya Kameswara Rao and his advisor, David Touretzky, have released a new educational tool for experimenting with text-to-image generation. The tool, called DiffusionDemo, is built on Stable Diffusion, a popular open-source model from Stability AI that generates novel images from a textual description. Other models in this class include DALL-E, Imagen, and Midjourney.

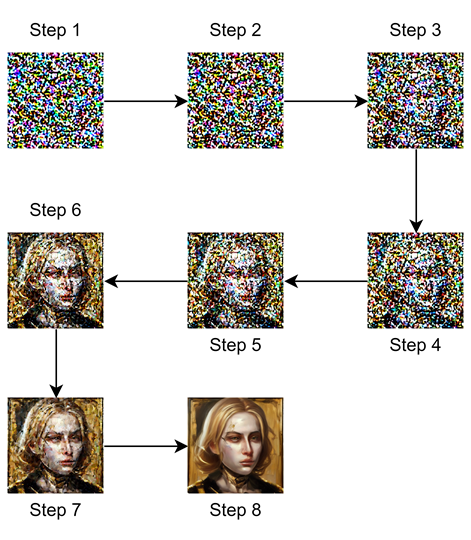

DiffusionDemo uses a scaled-down version of Stable Diffusion that trades image quality for speed, allowing rapid experimentation with different prompts and parameter settings. The demo includes ten panels, each supporting a different experiment or view of the image generation process. The tool's key educational goal is to help people understand the notion of "latent space," a mathematical space in which each image is described by a point (a list of numbers). Nearby points in latent space describe similar images. A neural network called a "decoder" maps these descriptions into actual images.
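
To make the idea of "nearby points describe similar images" concrete, here is a minimal sketch in plain Python. It is not DiffusionDemo's code. It assumes a tiny 2-D latent space and a hypothetical `toy_decoder` (a fixed linear map) standing in for Stable Diffusion's real decoder network, whose latents are much larger arrays. Stepping gradually from one latent vector to another yields decoded outputs that change gradually too.

```python
import math


def lerp(a, b, t):
    """Linearly interpolate between latent vectors a and b, with t in [0, 1]."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def toy_decoder(z):
    # Hypothetical stand-in for a real decoder network: a fixed linear map
    # from a 2-D latent vector to a 3-value "image" (e.g., one RGB pixel).
    weights = [[0.5, 0.1], [0.2, 0.7], [0.3, 0.3]]
    return [sum(w * x for w, x in zip(row, z)) for row in weights]


# Walk from latent point a to latent point b in four equal steps.
a = [1.0, 0.0]
b = [0.0, 1.0]
path = [lerp(a, b, i / 4) for i in range(5)]
images = [toy_decoder(z) for z in path]

# Neighboring latent points decode to outputs that are close to each other.
for i in range(4):
    print(f"step {i}->{i + 1}: {distance(images[i], images[i + 1]):.3f}")
print(f"end to end: {distance(images[0], images[-1]):.3f}")
```

Linear interpolation is the simplest choice here; for Gaussian-distributed latents like Stable Diffusion's, spherical interpolation (slerp) is often used instead.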

DiffusionDemo was developed with funding from NEOM Company and the AI-CARING AI Institute (NSF award IIS-2112633).

DiffusionDemo can be accessed at https://github.com/touretzkyds/DiffusionDemo